Time Evolution Split Operator Method

When cleaning my apartment, sometimes I just grab the nearest dirty thing to me and try to do something to it. But that is not the most efficient way to get things done. If I’m planning, I’ll first dedicate my attention to one problem, like putting clothing away, then rotate my attention to something else, like dirty dishes. I can keep focused on just one task and do it well. Each problem I solve optimally in shorter intervals instead of tackling everything at once.

That same principle applies to solving partial differential equations. Splitting Methods in Communication, Imaging, Science, and Engineering called this principle one of the big ideas of numerical computation. In numerics, we call it Strang splitting.

We will be applying Strang splitting to solve the Schrondinger equation, but people use the same idea to a variety of problems, like ones with different timescales, length scales, or physical processes. We will be using it to separate out terms diagonal in position space from terms diagonal in momentum space.

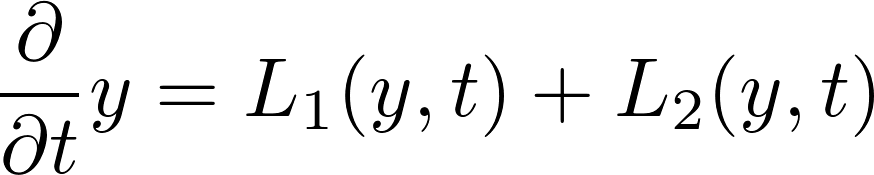

We can describe a class of general partial differential equations by

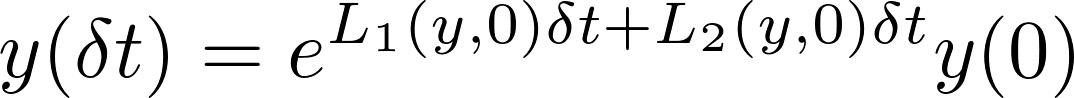

Over a small time step, the following approximation holds

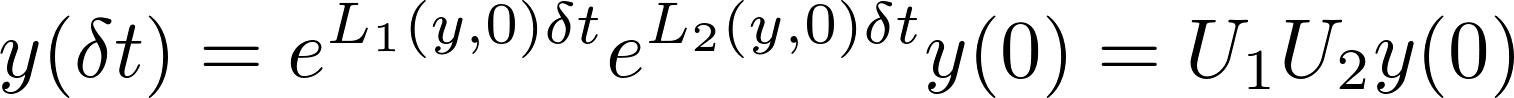

For Strang splitting, instead of applying both operators together, we break them up into two. I’ll discuss non-commutivity later.

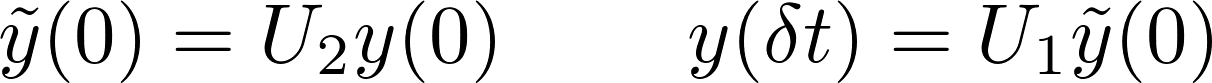

$U_1$ and $U_2$ are evolution operators. We can define

$U_1$ and $U_2$ are evolution operators. We can define

Applying to Quantum Mechanics

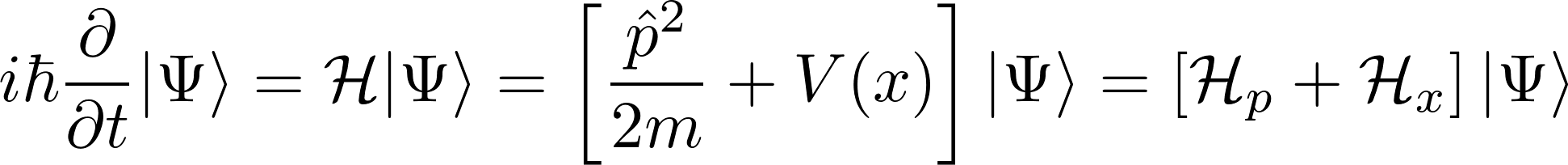

Now let’s take a look at the Schrodinger Equation:

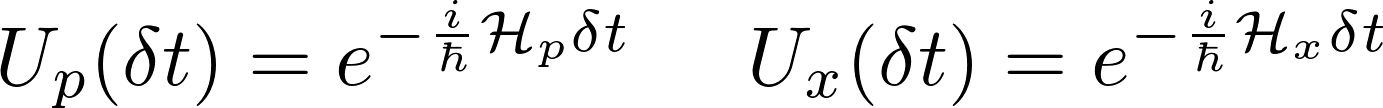

The Hamiltonian gets seperated into position terms and momentum terms. For ease, let’s define our unitary evolution operators,

The Hamiltonian gets seperated into position terms and momentum terms. For ease, let’s define our unitary evolution operators,

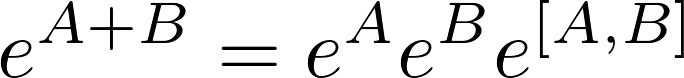

I mentioned earlier that I would discuss non-communitvity. We need to do that now. We can’t simply seperate the evolution operator for the full Hamiltonian into two parts, because we would introduce terms proportional to the commutator.

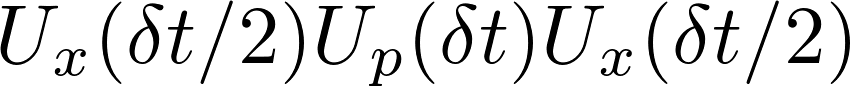

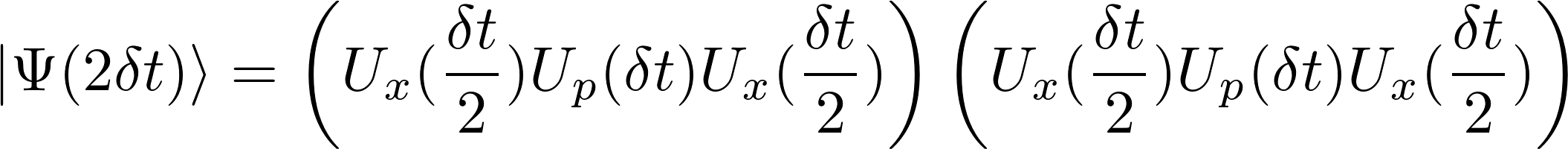

$e^{A+B}$ expanded has terms that look like $AB$ and $BA$, whereas $e^{A}e^{B}$ only has terms that look like $AB$. We lose the symmetry of the expression. We can gain back an order of accuracy by symmetrizing our formula, calculating a time step by

But the next step will the start with $U_x (\delta t/2)$ !

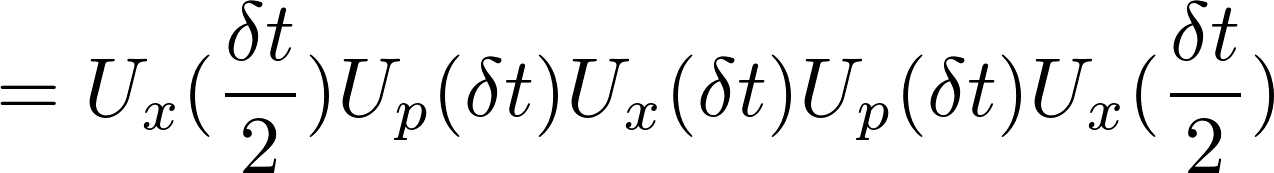

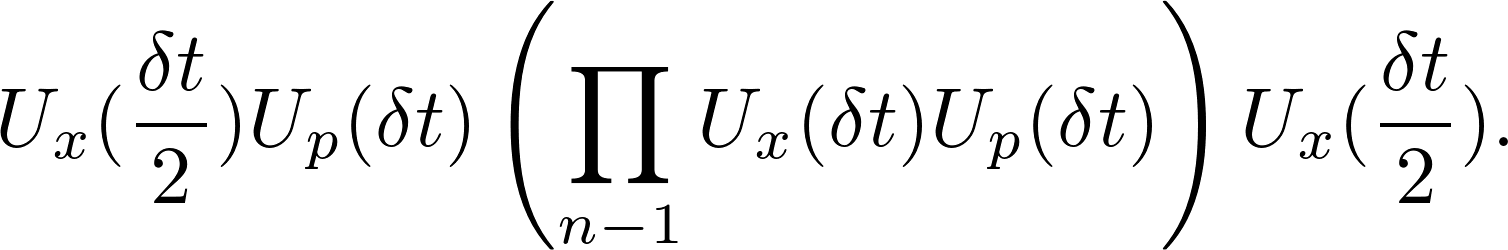

All we need to do to add an order of accuracy is start the simulation with $U_x(\delta t/2)$ and end it with $U_x(\delta t/2)$, leaving everything else the same. Pretty remarkable you can get that much of an improvement for that little work. Once we apply this to a bunch of time steps, we get

We have to apply a few operators before starting the loop. Between the loop and a measurement, we have to apply an additional operator.

In the spatial domain, the momentum operator involves derivatives and is rather icky. But in the momentum domain, we only have to multiply by $k^2/2m$. Thanks to some nicely optimized libraries, we can just transform into the momentum domain with fft, solve the momentum problem there, and transform back with ifft.

Rabi Oscillations

To demonstrate time evolution in a simple system with interesting physics, I chose to apply the split operator to Rabi Oscillations between two harmonic oscillators.

To get an idea of what will happen, we will use a qualitative model of two states weakly coupled to each other by a parameter $\epsilon$. If we have the two minima sufficifiently seperated from each other, tunneling will happen slowly and will not significantly affect the shape of the eigenfunctions and their energies $E_0$. Instead of of solving for the shape of the wavefunction, we solve a two-state Hamiltonian that looks like this,

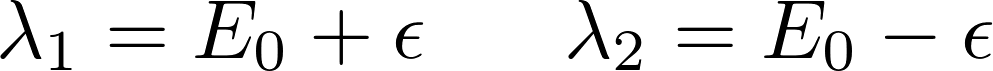

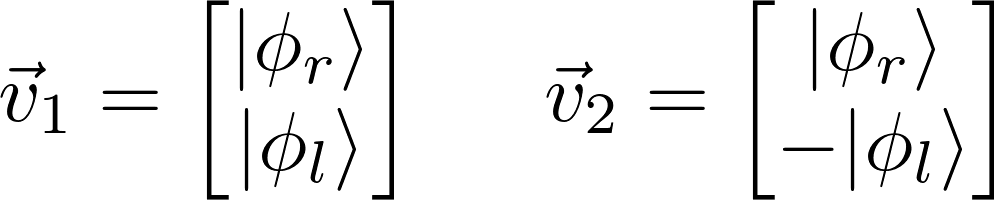

The eigenvalues and corresponding eigenvectors of the matrix are,

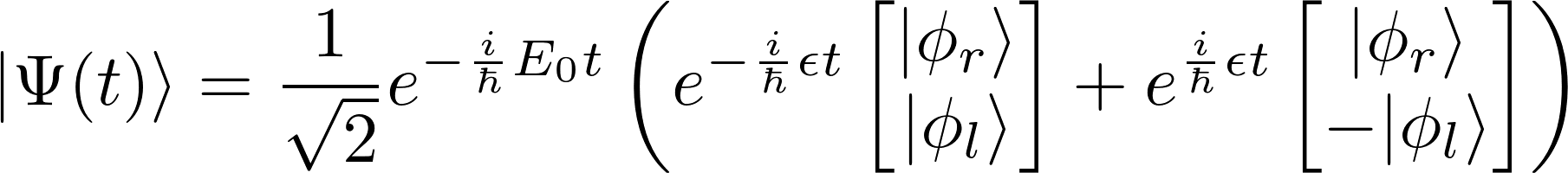

If a wavefunction starts purely in the right state, we want to choose a combination of our eigenvectors that sets the left state to zero at $t=0$. The resulting wavefunction will evolve as,

Thus this simple phenomological model shows us how we can expect the wavefunction to move back and forth between the two wells in a cyclical manner, with a period proportional to the rate of tunneling.

Packages

For the first time, I’m using Plots.jl, with PlotlyJS as the backend for Plots. I had to coax my computer a bit to get the packages to work, but the errors were specific to my computer. I choose to switch as I believe the PlotlyJS output will provide a better expierence for those viewing the GitHub-Pages static site, though it might cause more trouble for anyone using the jupyter notebooks. If you are having trouble plotting yourself, I recommend just switching back to whatever package is easiest for you.

I used Plots to generate a gif for me directly, but I found my old method of generating a file of .png files and then using ffmpeg from the command line much fast and easier.

using Plots

plotlyjs()

#using Rsvg

#using PyPlot # if you easiest for you

Input Parameters

# Set Time Parameters

t0=0

tf=40000

dt=.1

# Set Space Grid Parameters

dx=.1

xmax=8

# xmin will be -xmax. Making the situation symmetric

# How far seperated are the potential minima

seperation=6;

# minima at seperation/2 and -seperation/2

# How often we measure occupation and view the state

nmeasure=1000;

Automatic Evaluation Parameters

Given the parameters above, we can calculate the following variables that we will use in the code.

Note: k Gave me a bit of a headache. The algorithm depends quite a bit on the conventions fft decides to use ;(

Currently, I’m using odd N. You’ll have to change the formula if you use even N.

t=collect(t0:dt:tf)

x=collect(-xmax:dx:xmax)

nt=length(t)

N=length(x)

k = [ collect(0:((N-1)/2)) ; collect(-(N-1)/2:-1) ] *2*π/(N*dx);

occupation=zeros(Complex{Float64},floor(Int,nt/nmeasure),2);

The Potentials and Evolution Operators

Vx=.5*(abs(x)-seperation/2).^2;

Vk=k.^2/2

Uxh=exp(-im*Vx*dt/2);

Ux=exp(-im*Vx*dt);

Uf=exp(-im*Vk*dt);

"potentials and evolvers defined"

plot(x, Vx)

plot!(xlabel="x", ylabel="V",

plot_title="Double Well Potential")

The Unperturbed Wavefunctions

The ground state for a harmonic oscillator is a Gaussian \begin{equation} \langle x | \phi \rangle= \phi (x) = \frac{1}{\pi^{1/4}} e^{-\frac{x^2}{2}} \end{equation} We assume $\omega = \hbar = m = 1$ for sake of convenience.

ϕ(x)=π^(-.25)*exp(-x.^2/2)

ϕl=ϕ(x+seperation/2);

ϕr=ϕ(x-seperation/2);

Ψ0=convert(Array{Complex{Float64},1},ϕl);

plot(x,ϕl,label="ϕl")

plot!(x,ϕr,label="ϕr")

plot!(xlabel="x", ylabel="ϕ",

plot_title="Left and Right Wavefunctions")

FFT’s

This algorithm runs a large number of Fast Fourier Transforms and Inverse Fast Fourier Transforms. To speed the process, we can tell the computer to spend some time, in the beginning, allocating the right amount of space and optimizing the routine for the particular size and type of array we are passing it.

The next cell does this, by using plan_fft and plan_ifft to generate objects that can act on our arrays as operators.

ft=plan_fft(Ψ0);

Ψf=ft*Ψ0;

ift=plan_ifft(Ψf);

Occupancy of each state

To measure the occupancy of the total wavefunction in either the left or right well groundstate, I compute the value \begin{equation} c_r=\langle \Psi | \phi_r \rangle = \int \Psi^* (x) \phi_r(x) dx \;\;\;\;\;\;\; p_r=c_r^2 \end{equation} \begin{equation} c_l = \langle \Psi | \phi_l \rangle = \int \Psi^* (x) \phi_l (x) dx\;\;\;\;\;\;\; p_l = c_l^2 \end{equation} The probability of being in the state is the coefficient squared.

Though in theory these values will always be real, numerical errors introduce some errors, and Julia will assume that the answer is complex. Therefore, we need to apply abs to make the numbers Float64 instead of Complex{Float64}.

nmeas=1000

c=zeros(Float64,floor(Int,nt/nmeas),2);

# Uncomment the # lines to generate a gif. Note: It takes a long time

Ψ=Ψ0;

jj=1

# The operators we have to start off with

Ψ=Ψ.*Uxh

Psif=ft*Ψ

Psif=Psif.*Uf

Ψ=ift*Psif

#@gif for ii in 1:nt

for ii in 1:nt

Ψ=Ψ.*Ux

Psif=ft*Ψ

Psif=Psif.*Uf

Ψ=ift*Psif

if ii%nmeas == 0

# Every time we measure, we have to finish with a U_x half time step

Ψt=Ψ.*Uxh

c[jj,1]=abs(sum( conj(Ψt).*ϕl )) *dx

c[jj,2]=abs(sum( conj(Ψt).*ϕr )) *dx

jj+=1

end

#plot(x[21:141],Vx[21:141]/6, label="Vx scaled")

#plot!(x,abs(conj(Ψt).*Ψt), label="Wavefunction")

#plot!(xlabel="x", ylabel="Ψ",

# plot_title="Wavefunction evolution")

end

#end every nmeas

Ψ=Ψ.*Uxh;

Time Evolution in a double well potential. This gif was generated by `Plots.jl`

Time Evolution in a double well potential. This gif was generated splicing together PyPlot png's.

plot(c[:,1].^2,label="Left Prob")

plot!(c[:,2].^2, label="Right Prob")

plot!(xlabel="x",ylabel="Probability",

plot_title="Rabi Oscillations for a Double Harmonic Oscillator")

The faster one can perform Fourier Transforms, the faster one can carry out this algorithm. Therefore, scientists, such as [2] and [3], will use multiple cores or GPU’s.

In addition to real-time evolution, algorithms like this can determine the ground state of an arbitrary system by imaginary time evolution. Soon, I will take the work covered here and look at this aspect of the algorithm.

I’d particularly like to thank Dr. Lee J. O’Riordan [3] for sharing his experience on this topic.

[1] Glowinski, Roland, Stanley J. Osher, and Wotao Yin, eds. Splitting Methods in Communication, Imaging, Science, and Engineering. Springer, 2017.

[2] Heiko Bauke and Christoph H. Keitel. Accelerating the Fourier split operator method via graphics processing unit. Computer Physics Communications, 182(12):2454–2463 (2011)

[3] Lee James O’Riordan et al., GPUE: Phasegineering release, Zenodo. (2016) https://github.com/mlxd/GPUE DOI:10.5281/zenodo.57968

| Qunatum Time Evolution | |||

|---|---|---|---|

| # | Title | Level | Tags |

| 1 | Quantum Harmonic Oscillator |

||

| 2 | Time Evolution Spit Operator Method |

||

| 3 | Ground States: Imaginary Time Evolution |

||